Discover the latest news from the AR industry, with rumors and announcements about AR/MR headsets from Samsung, Apple and Nreal, plus a new 3D library from Google. 2021 looks set to be an interesting year for the AR market and its adoption by both enterprises and consumers. Read on for the details, or catch up with our 3rd recap and 4th recap of what's new in AR/XR.

Nreal will launch a mixed-reality headset for enterprises

After launching its Light AR glasses for consumers in Asia (read our review of the Nreal Light), with Europe and the U.S. to follow soon, Nreal is preparing a new mixed-reality headset aimed at enterprise users. The headset, which differs from the Nreal Light in design and features, is expected to launch in 2021.

The Nreal Enterprise Edition tethers to a dedicated computing unit and battery pack for longer-lasting power (unlike the Nreal Light, which relies on a connected smartphone for compute). The headset will also include eye-tracking and gesture recognition, allowing hands-free control. The company is targeting enterprise customers in manufacturing, retail, tourism, education and logistics.

It will be interesting to see how well such a device sells and penetrates the market, since it appears similar to the Microsoft HoloLens 2 (see our review of HoloLens 2) and targets the same markets as Microsoft and Magic Leap.

Leaked Samsung concept videos show what could be its future smart glasses

Like many other tech giants, Samsung is working on AR devices, and a recent leak shared on Twitter confirms it. Concept videos of what may be called "Samsung Glasses Lite" have surfaced, with no further information since. The glasses would likely be as lightweight as the Nreal Light or Oppo's AR glasses.

The videos present several possible AR experiences, simulating what the wearer would see through the glasses. It is not clear which display technology Samsung is using, but some realistic details (the nearly square AR display area, and the blacked-out top edge of the lenses that hides technical components) make it more likely that this is a real product in development rather than a pure concept.

Apple mixed-reality headset to have two 8K displays and eye-tracking technology, will probably cost $3,000

A recent report suggests that an Apple mixed-reality headset is on its way and would be the most advanced VR headset on the market. Although Apple is known for focusing on augmented reality, this leak concerns a mixed-reality device that would be mostly VR while also integrating interactions with the real world.

The headset would be equipped with about a dozen cameras, two ultra-high-resolution 8K displays and eye-tracking technology. It could sell for around $3,000, closer to the price of Magic Leap or HoloLens 2 than to that of Oculus headsets. Such a price suggests it would primarily target business users rather than consumers.

Such a headset could be used to increase productivity, cut travel costs through remote collaboration, or speed up hands-on training of new skills through new interactive methods.

Apple is also working on a pair of lightweight smart glasses designed to overlay virtual objects onto a person's view of the real world. This device probably still faces technological hurdles, which is why the MR headset is expected to arrive first.

Google Launches TensorFlow 3D Using LiDAR & Depth Sensors for Optimized AR Experiences

Google's AI research team regularly creates tools that let developers take advantage of the 3D data generated by the LiDAR and depth sensors built into recent AR-capable smartphones.

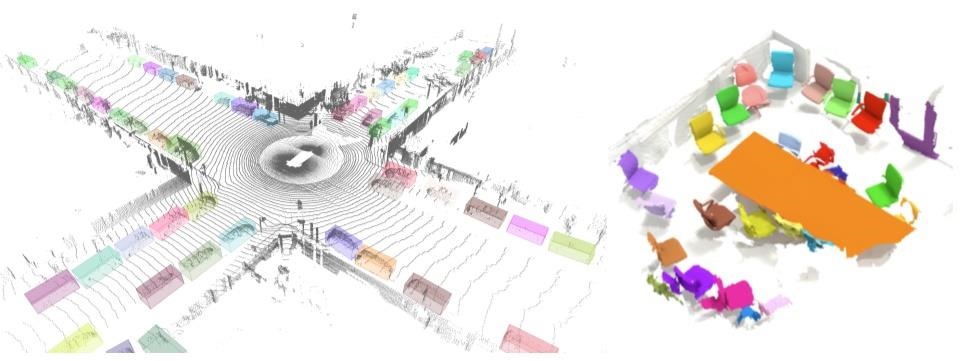

For instance, Google has recently added TensorFlow 3D (TF 3D), a library of 3D deep learning models covering 3D semantic segmentation, 3D object detection and 3D instance segmentation, to the TensorFlow repository for use in mobile AR experiences.

First, the 3D semantic segmentation model enables apps to distinguish objects from the background of a scene, much like the virtual backgrounds in Zoom or Teams. Next, the 3D instance segmentation model recognizes each object in a group as a separate individual, as with Snapchat Lenses that can put virtual masks on several people in the camera view at once. Finally, the 3D object detection model takes instance segmentation a step further by also classifying the objects in view.

Examples of the 3D object detection model (left) and the 3D instance segmentation model (right). Image via Google
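To make the difference between the three tasks more concrete, here is a toy sketch in plain Python. It is not the TF 3D API: the point cloud, the hand-written per-point labels (standing in for what a semantic segmentation model would predict), the `radius` threshold and the function names are all hypothetical. Instance segmentation is approximated by grouping nearby points of the same class, and detection is approximated by deriving a labelled bounding box from each instance, whereas real 3D detectors predict boxes directly from the data.

```python
from collections import defaultdict
from math import dist

# Hypothetical toy point cloud: (x, y, z) coordinates in metres.
POINTS = [
    (0.0, 0.0, 1.0), (0.1, 0.0, 1.0),   # person A
    (2.0, 0.0, 1.0), (2.1, 0.0, 1.0),   # person B
    (1.0, 1.0, 3.0), (1.1, 1.0, 3.0),   # background wall
]
# Semantic segmentation: one class label per point (hand-written here;
# a trained model would predict these). It cannot tell person A from person B.
SEMANTIC = ["person", "person", "person", "person", "background", "background"]


def instance_segmentation(points, labels, radius=0.5):
    """Split each non-background class into separate objects by grouping
    same-class points that are within `radius` of each other
    (connected components). Background points get instance id -1."""
    instance_ids = [-1] * len(points)
    next_id = 0
    for start in range(len(points)):
        if labels[start] == "background" or instance_ids[start] != -1:
            continue
        instance_ids[start] = next_id
        stack = [start]
        while stack:
            i = stack.pop()
            for j in range(len(points)):
                if (instance_ids[j] == -1 and labels[j] == labels[i]
                        and dist(points[i], points[j]) <= radius):
                    instance_ids[j] = next_id
                    stack.append(j)
        next_id += 1
    return instance_ids


def object_detection(points, labels, instance_ids):
    """Summarise each instance as (class, min corner, max corner) of an
    axis-aligned 3D bounding box."""
    groups = defaultdict(list)
    for point, label, inst in zip(points, labels, instance_ids):
        if inst != -1:
            groups[inst].append((label, point))
    boxes = []
    for inst in sorted(groups):
        label = groups[inst][0][0]
        coords = [point for _, point in groups[inst]]
        min_corner = tuple(min(c[k] for c in coords) for k in range(3))
        max_corner = tuple(max(c[k] for c in coords) for k in range(3))
        boxes.append((label, min_corner, max_corner))
    return boxes


ids = instance_segmentation(POINTS, SEMANTIC)
print(ids)  # [0, 0, 1, 1, -1, -1]
for box in object_detection(POINTS, SEMANTIC, ids):
    print(box)
```

The semantic labels alone only say "person"; the instance ids separate the two people; the detection step then attaches a class and a 3D box to each of them.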

This is also not the first time Google has used depth data for AR experiences. Google's Depth API for ARCore enables occlusion, letting virtual content appear in front of or behind real-world objects, in mobile apps that rely on standard smartphone cameras.
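Conceptually, depth-based occlusion comes down to a per-pixel depth test: a virtual pixel is drawn only where the virtual object is closer to the camera than the real surface at that pixel. The sketch below illustrates that idea in plain Python on tiny images. It is not ARCore's API (ARCore apps typically do this on the GPU in a shader); the function name, the string "pixels" and the nested-list image layout are assumptions made for readability.

```python
def composite(camera_rgb, real_depth, virtual_rgb, virtual_depth):
    """Hypothetical per-pixel occlusion test.

    real_depth: distance in metres from the camera to the real scene
    (e.g. from a depth map). virtual_depth: distance to the virtual object,
    or None where no virtual content is rendered."""
    out = []
    for y in range(len(camera_rgb)):
        row = []
        for x in range(len(camera_rgb[y])):
            v_depth = virtual_depth[y][x]
            if v_depth is not None and v_depth < real_depth[y][x]:
                row.append(virtual_rgb[y][x])   # virtual content is in front
            else:
                row.append(camera_rgb[y][x])    # real object hides it, or nothing virtual here
        out.append(row)
    return out


# A 1x3 image: a real object 0.5 m away sits in the middle pixel,
# and a virtual object 1 m away covers the first two pixels.
camera = [["C", "C", "C"]]
real = [[2.0, 0.5, 2.0]]
virtual = [["V", "V", "V"]]
virtual_z = [[1.0, 1.0, None]]
print(composite(camera, real, virtual, virtual_z))  # [['V', 'C', 'C']]
```

The middle pixel shows the camera image because the real object (0.5 m) is closer than the virtual one (1 m), which is exactly the "behind real-world objects" effect described above.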

Sources:

Nreal unveils enterprise edition of mixed-reality glasses – VentureBeat

Samsung concept videos leak ‘Glasses Lite’ and ‘Next Wearable Computing’ AR vision – 9to5Google

Apple mixed-reality headset to have two 8K displays, cost $3,000 – 9to5Mac

Google Launches TensorFlow 3D Using LiDAR & Depth Sensors for Advanced AR Experiences – Next Reality