Get the latest insights into Apple’s recent work on augmented reality from Brian, Immersiv.io’s iOS developer and a huge Apple fan. You’ll find the announcements from Apple’s WWDC 2021, along with some thoughts on ARKit and the power of LiDAR.

When ARKit was introduced back in 2017 with iOS 11, it was a big step forward. All the heavy lifting needed to create an augmented reality experience, from analyzing the scene frame by frame to tracking the device’s movement and orientation, was handled out of the box by iOS. And unlike many AR experiences of the time, Apple’s ARKit framework worked really well! In fact, it was probably the first time I saw virtual objects placed in the real world as if they truly belonged there. An object placed on the floor was literally on the floor. That may seem obvious nowadays, but back then, it was HUGE!

Since then, ARKit has kept growing year after year, becoming more robust and feature-packed. And with LiDAR coming to the newest devices, AR experiences benefit from even more accurate positioning and precise depth data. A couple of weeks ago, Apple unveiled what’s next for AR at WWDC21. And even if it didn’t seem like much, there was actually more than meets the eye if we read between the lines…

Discover the power of ARKit

The first thing ARKit brought to developers was built-in plane detection: horizontal planes in the very first version, then vertical planes with ARKit 1.5 (iOS 11.3). Objects can be placed on, or relative to, those planes.
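To give an idea of what this looks like in code, here is a minimal sketch, assuming you already have an `ARSession` (for example the one owned by an `ARView` or `ARSCNView`). `startPlaneDetection` and `PlaneObserver` are hypothetical names; `ARWorldTrackingConfiguration`, `planeDetection` and `ARPlaneAnchor` are ARKit’s own types.

```swift
import ARKit

/// Hypothetical helper: runs world tracking with both horizontal and vertical plane detection.
func startPlaneDetection(on session: ARSession) {
    let configuration = ARWorldTrackingConfiguration()
    configuration.planeDetection = [.horizontal, .vertical]
    session.run(configuration)
}

/// Hypothetical delegate: every detected plane is delivered to us as an ARPlaneAnchor.
final class PlaneObserver: NSObject, ARSessionDelegate {
    func session(_ session: ARSession, didAdd anchors: [ARAnchor]) {
        for case let plane as ARPlaneAnchor in anchors {
            let kind = plane.alignment == .horizontal ? "horizontal" : "vertical"
            print("Detected a \(kind) plane centered at \(plane.center)")
        }
    }
}
```

The observer has to be set as the session’s `delegate` and kept alive; content can then be positioned relative to each plane anchor’s `transform`.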

Beyond those « AR basics », ARKit brought a lot of cool features, starting with face tracking. It originally relied on the TrueDepth camera (the same technology behind Face ID), and since iOS 14 it also runs on devices without one, using only the Neural Engine (A12 Bionic or later). It provides a 3D mesh of the user’s face, tracked in real time, and can track up to three faces at once.

This enables features such as overlaying 2D textures on the user’s face (face tattoos, for example) that stay anchored to it and move with its expressions; attaching 3D content such as hats or glasses; or reading facial expressions (blend shapes) to animate a character, as Animoji do.
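As a rough sketch of how an app taps into this, assuming an existing `ARSession`: the configuration asks for as many faces as the device supports, and a delegate reads the face mesh and a couple of blend shape coefficients (values between 0 and 1). `startFaceTracking` and `FaceObserver` are hypothetical names.

```swift
import ARKit

/// Hypothetical helper: starts face tracking with the maximum number of faces the device allows.
func startFaceTracking(on session: ARSession) {
    // Face tracking is not available on every device, so check first.
    guard ARFaceTrackingConfiguration.isSupported else { return }
    let configuration = ARFaceTrackingConfiguration()
    configuration.maximumNumberOfTrackedFaces = ARFaceTrackingConfiguration.supportedNumberOfTrackedFaces
    session.run(configuration)
}

/// Hypothetical delegate: reads expressions and mesh data from each tracked face.
final class FaceObserver: NSObject, ARSessionDelegate {
    func session(_ session: ARSession, didUpdate anchors: [ARAnchor]) {
        for case let face as ARFaceAnchor in anchors {
            // Blend shape coefficients go from 0.0 (neutral) to 1.0 (fully expressed).
            let jawOpen = face.blendShapes[.jawOpen]?.floatValue ?? 0
            let leftBlink = face.blendShapes[.eyeBlinkLeft]?.floatValue ?? 0
            // face.geometry is the face mesh that textures or 3D content can follow.
            print("jawOpen: \(jawOpen), eyeBlinkLeft: \(leftBlink), vertices: \(face.geometry.vertices.count)")
        }
    }
}
```

An Animoji-style character would map coefficients like `jawOpen` onto its own rig on every update.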

Beyond face tracking comes body tracking, which works pretty well. ARKit can detect a person’s body and its motion. From there, we can place an avatar in space and animate it with the detected movements. It’s also possible to record those movements and export them for later use.
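Here is a minimal sketch of reading that motion, assuming an existing `ARSession`. ARKit exposes the tracked person as an `ARBodyAnchor` with a 3D skeleton; this example only reads the head joint, and `startBodyTracking` and `BodyObserver` are hypothetical names.

```swift
import ARKit

/// Hypothetical helper: starts body tracking when the device supports it.
func startBodyTracking(on session: ARSession) {
    guard ARBodyTrackingConfiguration.isSupported else { return }
    session.run(ARBodyTrackingConfiguration())
}

/// Hypothetical delegate: reads one joint of the tracked skeleton on every update.
final class BodyObserver: NSObject, ARSessionDelegate {
    func session(_ session: ARSession, didUpdate anchors: [ARAnchor]) {
        for case let body as ARBodyAnchor in anchors {
            // Model transforms are expressed relative to the skeleton's root (hip) joint.
            if let head = body.skeleton.modelTransform(for: .head) {
                let position = head.columns.3
                print("Head relative to root: (\(position.x), \(position.y), \(position.z))")
            }
        }
    }
}
```

Driving an avatar amounts to copying such joint transforms onto the matching joints of a rigged model, and recording them frame by frame is one way to export the motion.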

It’s worth noting that ARKit can also detect known reference images, or real-world 3D objects that you have scanned beforehand, and lets you place content on or around them. The downside is that ARKit only recognizes that exact image, or the exact object that was scanned. Still, this works well for targeted use cases and makes for fun, instant AR experiences.
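A minimal sketch of image and object detection, assuming the reference images and scanned objects live in Xcode asset catalog groups. The group names `"AR Images"` and `"AR Objects"`, as well as `startDetection` and `DetectionObserver`, are hypothetical.

```swift
import ARKit

/// Hypothetical helper: loads reference images/objects from the asset catalog and runs detection.
func startDetection(on session: ARSession) {
    let configuration = ARWorldTrackingConfiguration()
    // Hypothetical asset catalog group names; adjust to your project.
    if let images = ARReferenceImage.referenceImages(inGroupNamed: "AR Images", bundle: nil) {
        configuration.detectionImages = images
    }
    if let objects = ARReferenceObject.referenceObjects(inGroupNamed: "AR Objects", bundle: nil) {
        configuration.detectionObjects = objects
    }
    session.run(configuration)
}

/// Hypothetical delegate: ARKit adds an anchor when it recognizes a known image or object.
final class DetectionObserver: NSObject, ARSessionDelegate {
    func session(_ session: ARSession, didAdd anchors: [ARAnchor]) {
        for anchor in anchors {
            if let imageAnchor = anchor as? ARImageAnchor {
                print("Found image: \(imageAnchor.referenceImage.name ?? "unnamed")")
            } else if let objectAnchor = anchor as? ARObjectAnchor {
                print("Found object: \(objectAnchor.referenceObject.name ?? "unnamed")")
            }
        }
    }
}
```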

Another very cool addition over the past few years is collaborative sessions. They let users’ devices build a shared world map and exchange ARAnchors to create a multi-user experience (with up to six users). No Internet connection is required, and with RealityKit’s synchronization service, data sharing can even happen automatically, completely out of the box.
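When not relying on RealityKit’s automatic synchronization, the exchange can be wired up by hand. The sketch below assumes you provide your own peer-to-peer transport (MultipeerConnectivity, for instance); `CollaborationRelay`, `sendToPeers` and `receive(_:)` are hypothetical names, while `isCollaborationEnabled`, `ARSession.CollaborationData` and `update(with:)` come from ARKit.

```swift
import ARKit

/// Hypothetical relay between ARKit's collaboration data and a transport of your choice.
final class CollaborationRelay: NSObject, ARSessionDelegate {
    let session = ARSession()
    /// Hypothetical hook: send bytes to the other participants (e.g. via MultipeerConnectivity).
    var sendToPeers: (Data) -> Void = { _ in }

    func start() {
        let configuration = ARWorldTrackingConfiguration()
        // Ask ARKit to produce data describing its world map and anchors for other devices.
        configuration.isCollaborationEnabled = true
        session.delegate = self
        session.run(configuration)
    }

    // ARKit periodically hands us collaboration data to share.
    func session(_ session: ARSession, didOutputCollaborationData data: ARSession.CollaborationData) {
        guard let encoded = try? NSKeyedArchiver.archivedData(withRootObject: data, requiringSecureCoding: true) else { return }
        sendToPeers(encoded)
    }

    // Data received from a peer is decoded and merged into the local session.
    func receive(_ encoded: Data) {
        guard let data = try? NSKeyedUnarchiver.unarchivedObject(ofClass: ARSession.CollaborationData.self, from: encoded) else { return }
        session.update(with: data)
    }
}
```

Keeping the transport behind a closure keeps the ARKit side independent of how devices actually discover and talk to each other.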

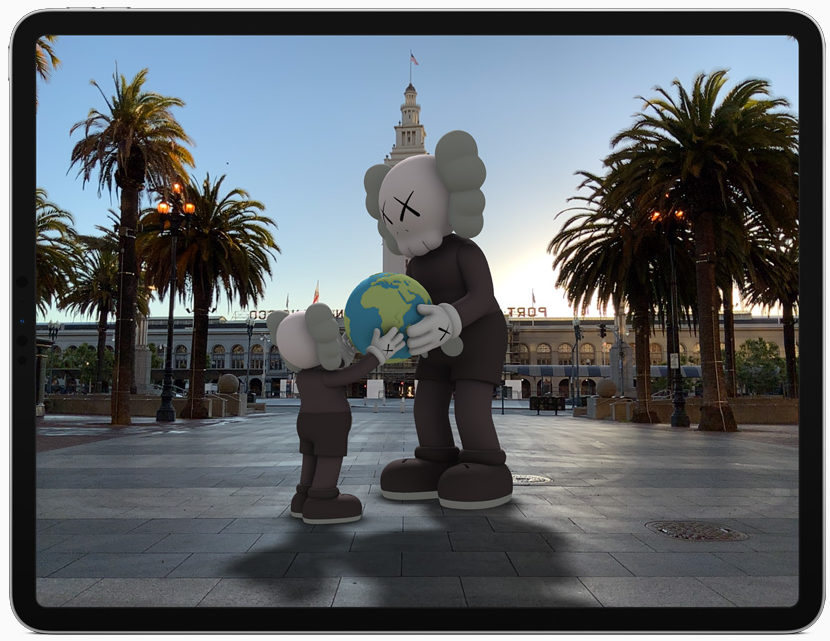

Last but not least come Location Anchors, which are, in my opinion, one of the biggest pillars of Apple’s future AR world. Introduced at WWDC20, they are a powerful way to place AR content in the real world based on geolocation.

Apple combines the device’s geolocation with the 3D feature points of the buildings surrounding the user to determine exactly where the user is, so that an ARGeoAnchor can be created at the right position, orientation and altitude in the real world. For now, the feature is only available in several US cities, with London joining in iOS 15, but we might see it reach many more cities soon. Hopefully before iOS 16!
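A minimal sketch of placing a location anchor, assuming an existing `ARSession` and a coordinate of your choosing. Availability has to be checked first, since geo tracking only works in supported areas; `placeGeoAnchor` is a hypothetical name.

```swift
import ARKit
import CoreLocation

/// Hypothetical helper: starts geo tracking and anchors content at a real-world coordinate.
func placeGeoAnchor(in session: ARSession,
                    at coordinate: CLLocationCoordinate2D,
                    altitude: CLLocationDistance?) {
    // Geo tracking depends on the user's location being in a supported area.
    ARGeoTrackingConfiguration.checkAvailability { isAvailable, error in
        guard isAvailable else {
            print("Geo tracking unavailable: \(error?.localizedDescription ?? "unsupported location")")
            return
        }
        DispatchQueue.main.async {
            session.run(ARGeoTrackingConfiguration())
            // Without an explicit altitude, ARKit places the anchor at its estimated ground level.
            let anchor = altitude.map { ARGeoAnchor(coordinate: coordinate, altitude: $0) }
                ?? ARGeoAnchor(coordinate: coordinate)
            session.add(anchor: anchor)
        }
    }
}
```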

Hello, LiDAR

One piece of hardware that brought something pretty big to AR on Apple devices is the LiDAR Scanner, first added to the 2020 iPad Pro, then to the iPhone 12 Pro and iPhone 12 Pro Max.

LiDAR works by measuring the light’s time of flight: it projects infrared light from one sensor and measures how long the reflected light takes to return to a second sensor. This makes it possible to build a precise map of the surroundings, unlocking even greater possibilities for AR experiences.
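The underlying arithmetic is simple: the light travels to the surface and back, so the distance is the speed of light times the round-trip time, divided by two. The sketch below only illustrates that relationship (using the speed of light in a vacuum); it is not how the scanner’s hardware is actually implemented.

```swift
/// Speed of light in a vacuum, in meters per second.
let speedOfLight = 299_792_458.0

/// Converts a measured round-trip time (seconds) into a one-way distance (meters).
/// Light goes to the surface and back, hence the division by two.
func distance(forRoundTripTime seconds: Double) -> Double {
    speedOfLight * seconds / 2
}

// A surface 5 m away sends the light back after roughly 33.4 nanoseconds.
let roundTrip = 2 * 5.0 / speedOfLight
print(distance(forRoundTripTime: roundTrip))
```

Those nanosecond-scale timings are why time-of-flight sensors need dedicated hardware: a single nanosecond of error already shifts the result by about 15 cm.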

Developers mainly access LiDAR through two APIs. The first is the Scene Reconstruction API, which provides a precise 3D mapping of the surroundings, including the mesh geometry of nearby objects. The second is the Scene Depth API, which provides a depth map containing a depth value for each pixel of every frame captured by the camera.
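Both APIs are opt-in on a world tracking configuration. Here is a minimal sketch, assuming an existing `ARSession`; `startLiDARSession` and `LiDARObserver` are hypothetical names, and each feature is only enabled when the device reports support for it.

```swift
import ARKit

/// Hypothetical helper: enables Scene Reconstruction and Scene Depth when available.
func startLiDARSession(on session: ARSession) {
    let configuration = ARWorldTrackingConfiguration()
    // Scene Reconstruction: a live mesh of the surroundings.
    if ARWorldTrackingConfiguration.supportsSceneReconstruction(.mesh) {
        configuration.sceneReconstruction = .mesh
    }
    // Scene Depth: a per-pixel depth map attached to each ARFrame.
    if ARWorldTrackingConfiguration.supportsFrameSemantics(.sceneDepth) {
        configuration.frameSemantics.insert(.sceneDepth)
    }
    session.run(configuration)
}

/// Hypothetical delegate: reads depth maps and mesh chunks as they arrive.
final class LiDARObserver: NSObject, ARSessionDelegate {
    // Called for every frame; real code would throttle this work.
    func session(_ session: ARSession, didUpdate frame: ARFrame) {
        // depthMap is a CVPixelBuffer of 32-bit float distances, in meters.
        guard let depthMap = frame.sceneDepth?.depthMap else { return }
        print("Depth map: \(CVPixelBufferGetWidth(depthMap))x\(CVPixelBufferGetHeight(depthMap))")
    }

    // The reconstructed mesh is delivered in chunks, as ARMeshAnchor instances.
    func session(_ session: ARSession, didAdd anchors: [ARAnchor]) {
        for case let mesh as ARMeshAnchor in anchors {
            print("New mesh chunk with \(mesh.geometry.faces.count) faces")
        }
    }
}
```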

But LiDAR isn’t limited to those two APIs: it also improves the whole ARKit framework for free, making its key features more precise overall, from object placement to distance measurement.

It’s worth noting that LiDAR also enables what Apple calls “instant AR”. ARKit no longer needs to wait for enough visual input before it starts world tracking: it gets depth data from LiDAR instantly and can start tracking right away, so users no longer have to scan their surroundings before the AR experience begins.

Now that we covered the power of ARKit and the LiDAR Scanner, what did Apple bring us at WWDC21?

Augmented Reality at WWDC21…

For AR enthusiasts, it’s true that WWDC21 left us wanting more. ARKit 5 only brings Location Anchors to London, improves body tracking and adds face tracking support for the new iPad Pro’s front-facing Ultra Wide camera. RealityKit 2, on the other hand, got a lot of improvements, including custom shaders, dynamic assets and a character controller.

Apple also introduced some very cool App Clip Code anchors. App Clips, introduced at WWDC20 with iOS 14, let developers create lightweight versions of their apps, under 10 MB (a limit enforced by Apple), that can be downloaded instantly by scanning an App Clip Code, without going through the App Store.

App Clip Code anchors let ARKit detect an App Clip Code and place content around it. This enables instant AR experiences: for example, previewing in a plant shop the plant that would grow from a packet of seeds, and much more.
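A minimal sketch of App Clip Code tracking, assuming an existing `ARSession`. The code’s URL is decoded asynchronously, so the delegate checks the decoding state before using it; `startAppClipCodeTracking` and `AppClipCodeObserver` are hypothetical names.

```swift
import ARKit

/// Hypothetical helper: enables App Clip Code detection in world tracking.
func startAppClipCodeTracking(on session: ARSession) {
    guard ARWorldTrackingConfiguration.supportsAppClipCodeTracking else { return }
    let configuration = ARWorldTrackingConfiguration()
    configuration.appClipCodeTrackingEnabled = true
    session.run(configuration)
}

/// Hypothetical delegate: reacts once an App Clip Code's URL has been decoded.
final class AppClipCodeObserver: NSObject, ARSessionDelegate {
    func session(_ session: ARSession, didUpdate anchors: [ARAnchor]) {
        for case let code as ARAppClipCodeAnchor in anchors {
            switch code.urlDecodingState {
            case .decoded:
                // Content (e.g. the grown plant) can be placed relative to code.transform.
                print("App Clip Code \(code.url?.absoluteString ?? "") at \(code.transform.columns.3)")
            case .decoding:
                break
            case .failed:
                print("Could not decode this App Clip Code")
            @unknown default:
                break
            }
        }
    }
}
```

Using the decoded URL to pick which content to show is what lets one App Clip serve many different products.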

Now, you might think I forgot the biggest AR-related announcement of WWDC21: Object Capture, which harnesses the power of the M1 chip on the Mac to create very high-quality 3D models of real-world objects from photos (not just iPhone photos, but also DSLR or drone shots). The results are convincing and impressive, even with the first betas of macOS Monterey. This should unleash a lot of creativity and power many great AR experiences to come.
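On the Mac, Object Capture is exposed through RealityKit’s `PhotogrammetrySession`. The sketch below assumes macOS 12 on supported hardware, a folder of photos of the object, and an output path ending in `.usdz`; `reconstructModel` is a hypothetical name, and `.medium` is just one of the available detail levels.

```swift
import Foundation
import RealityKit

/// Hypothetical helper: turns a folder of photos into a USDZ model.
@available(macOS 12.0, *)
func reconstructModel(from imagesFolder: URL, to outputModel: URL) async throws {
    let session = try PhotogrammetrySession(input: imagesFolder,
                                            configuration: PhotogrammetrySession.Configuration())
    // Request a single model file at medium detail.
    try session.process(requests: [.modelFile(url: outputModel, detail: .medium)])

    // Results and progress arrive as an asynchronous stream of outputs.
    for try await output in session.outputs {
        switch output {
        case .requestProgress(_, fractionComplete: let fraction):
            print("Progress: \(Int(fraction * 100))%")
        case .requestComplete(_, .modelFile(url: let url)):
            print("Model written to \(url.path)")
        case .requestError(_, let error):
            throw error
        case .processingComplete:
            return
        default:
            break
        }
    }
}
```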

… and beyond!

WWDC21 brought more to AR than we might think. If we look carefully, Apple paved the way with a wide range of features to fuel its future AR world.

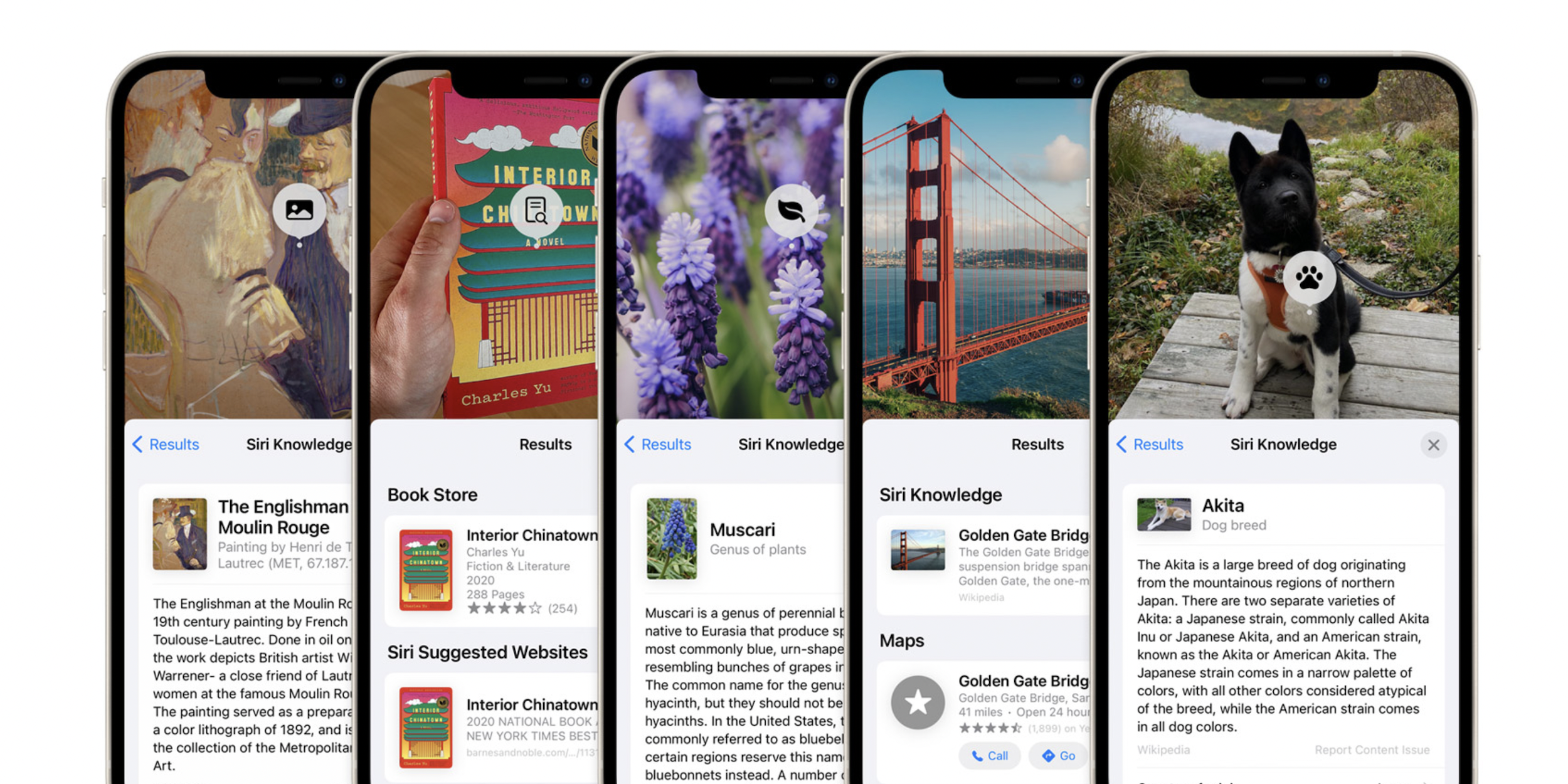

Let’s start with Live Text and Visual Lookup, coming in iOS 15. Every iOS device with an A12 chip or later will be able to detect text in any photo, live in the Camera app, or even as keyboard input. For example, you can point your device at a business’s phone number on a piece of paper and call it right away.

Visual Lookup is even cooler: iOS can automatically recognize animals, plants, buildings and even art (like paintings) in your photos, and even on the web while browsing. Best of all, these features run entirely on-device thanks to the Neural Engine.

Meanwhile, the Vision framework keeps getting more powerful and can identify hand poses.
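Here is a minimal sketch of hand pose detection on a single camera frame, assuming the iOS 15 SDK (where the request’s `results` are typed as hand pose observations) and a pixel buffer you already have, such as `ARFrame.capturedImage`. `indexFingerTips` and the 0.3 confidence threshold are hypothetical choices.

```swift
import CoreGraphics
import CoreVideo
import Vision

/// Hypothetical helper: returns the index fingertip of each detected hand,
/// in normalized image coordinates (0...1, origin at the bottom-left).
func indexFingerTips(in pixelBuffer: CVPixelBuffer) throws -> [CGPoint] {
    let request = VNDetectHumanHandPoseRequest()
    request.maximumHandCount = 2

    let handler = VNImageRequestHandler(cvPixelBuffer: pixelBuffer, orientation: .up, options: [:])
    try handler.perform([request])

    var tips: [CGPoint] = []
    for observation in request.results ?? [] {
        let tip = try observation.recognizedPoint(.indexTip)
        // Skip joints Vision is not confident about (hypothetical threshold).
        if tip.confidence > 0.3 {
            tips.append(tip.location)
        }
    }
    return tips
}
```

The normalized points still need converting into view or world coordinates before an AR scene can react to them.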

ShazamKit not only lets developers recognize music in their apps, but also generate custom signatures from any audio so their apps can recognize it, making it possible to sync an app with live content. Matching against such a custom catalog happens on-device, and could power some very cool interactive experiences.

Taken separately, these features fall outside the scope of augmented reality and might not seem linked to it at all. But taken together, they could enable deeply integrated AR experiences that go well beyond anything we can imagine today. They actually pave the way for an « AR world ».

For that to become reality, we would need a new kind of hardware to truly harness the potential of augmented reality… As Tim Cook said at Paris’s VivaTech conference in June, « We’ve been working on AR first with our phones and iPads, and later we’ll see where that goes in terms of products ».

My guess is that « later » is a lot closer than we might think…

This article was written by Immersiv.io’s Dev Lab, a team of experienced AR developers who build AR applications with the latest technologies and dev kits on the market.

Special thanks to Brian!